Gelare: Prototyping Platform for Assistive Robotics

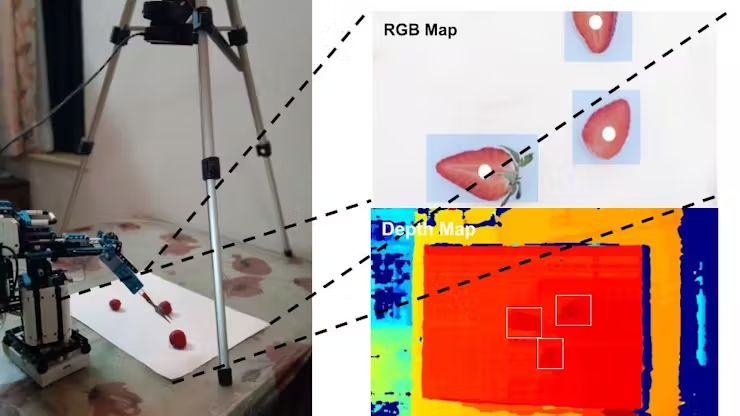

Gelare brings robotic manipulation with real-time computer vision on RGB-D sensors to an accessible platform for prototyping assistive-robotics applications.

TL;DR: Gelare makes robotic manipulation with real-time RGB-D computer vision available on an accessible prototyping platform. We quantized and deployed computer vision models built on FOMO (Faster Objects, More Objects), a CNN architecture designed for embedded devices, and got the ML model processing RGB and stereo depth data at 30 FPS using under 3 MB of RAM and 1 mW of power. On top of this platform, we developed an assistive-robotics application for robot-assisted feeding.

| Resource | Link |
|---|---|
| Project Repository | GitHub |
| Project Details | Hackster.io |

Project Overview

The goal is to make robotic manipulation with real-time computer vision on RGB-D sensors available on an accessible platform for prototyping.

To do this, we quantized and deployed computer vision models built on FOMO (Faster Objects, More Objects), a CNN architecture designed for embedded devices. The resulting ML model processes RGB and stereo depth data at 30 FPS using under 3 MB of RAM and 1 mW of power.

Using this platform, we then developed an assistive-robotics application for robot-assisted feeding.
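As a rough illustration of how detections from a FOMO-style model might be combined with stereo depth to produce 3D targets for a manipulator, here is a minimal sketch. It is not taken from the project repository. It assumes the model outputs a coarse per-cell score grid (FOMO's default output is 1/8 of the input resolution), that the depth map is aligned to the RGB frame and expressed in meters, and that a pinhole camera model applies. The names `Intrinsics`, `cell_to_pixel`, `backproject`, and `detections_to_points`, as well as the threshold value, are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Intrinsics:
    """Pinhole camera parameters in pixels (hypothetical values supplied by the caller)."""
    fx: float
    fy: float
    cx: float
    cy: float


def cell_to_pixel(row, col, cell_size=8):
    """Return the center pixel (u, v) of a grid cell, assuming each cell covers cell_size pixels."""
    u = (col + 0.5) * cell_size
    v = (row + 0.5) * cell_size
    return u, v


def backproject(u, v, depth_m, k):
    """Convert a pixel and its depth (meters) to a 3D point in the camera frame."""
    x = (u - k.cx) * depth_m / k.fx
    y = (v - k.cy) * depth_m / k.fy
    return (x, y, depth_m)


def detections_to_points(heatmap, depth, k, threshold=0.5, cell_size=8):
    """Turn every grid cell scoring at or above threshold into a 3D point.

    heatmap: 2D list of per-cell scores in [0, 1].
    depth:   2D list of depth values in meters, aligned to the RGB image.
    Cells whose depth reading is missing (<= 0) are skipped.
    """
    points = []
    for r, row_scores in enumerate(heatmap):
        for c, score in enumerate(row_scores):
            if score < threshold:
                continue
            u, v = cell_to_pixel(r, c, cell_size)
            z = depth[int(v)][int(u)]
            if z <= 0:
                continue  # no valid stereo match at this pixel
            points.append(backproject(u, v, z, k))
    return points
```

In a feeding application, points like these would still need to be transformed from the camera frame into the robot's base frame before being used as motion targets; that step depends on the specific hardware calibration and is omitted here.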