In-Hand manipulation using RL

Demo for the Robotic Grasping and Manipulation Competition (RGMC) at ICRA 2025 from the Robotic Manipulation Club Caltech

| Resource | Link |
|---|---|
| Project Related repositories | github |
| Club website | website |
| Wandb report | report |

This project is a demo for the Robotic Grasping and Manipulation Competition (RGMC) at ICRA 2025 for the in-hand manipulation track. We were the first team of undergraduates competing in the competition this year. This project was built in under a month with a team of 5 students funded by the Robotic Manipulation Club Caltech.

I started the Robotic Manipulation Club at Caltech in the Fall of 2024 and we raised $8k to secure hardware and compute to train and deploy policies. The list of teams competing in the competition can be found here.

The details of the competition can be found here.

Overview of the competition

To give a quick technical overview, the RGMC In-Hand Manipulation Sub-Track is a robotics competition focused on autonomous dexterous manipulation using robot hands. Teams compete across two main categories:

Task A - Object Position Control

Teams manipulate cylindrical objects through precise 3D waypoint sequences within a 5cm³ workspace, tracked via AprilTags. Competition includes both speed-focused (A1) and precision-focused (A2) subtasks using known and unknown waypoints respectively.

Task B - Object Re-orientation

Teams perform in-hand rotation of labeled cube objects to match target orientations specified by face letters (A-F). Includes speed-focused (B1) and capability-focused (B2) subtasks with known and unknown orientation sequences.

Technical Requirements

- Fully autonomous operation (no teleoperation)

- Custom robot hands on stationary mounts (commercial, open-source, or custom designs)

- AprilTag-based object tracking via camera systems

- 3D printed competition objects (cylinders: 60-100mm diameter, cubes: 50-90mm) plus one novel YCB object

- ROS-based auto-evaluation system for real-time scoring

Scoring System

Ranking-based point system across all subtasks, with Task A worth 5 points per object type and Task B worth 10 points each. Teams are ranked by execution speed (A1, B1) or accuracy/completion rate (A2, B2).

The competition

For the in-hand manipulation build, we went with the Leap Hand since it was cheap and we had an extra one lying around in the lab. The only catch was that it was a left hand, not a right hand. From an RL perspective this did not make much difference, but it required modifications to the environment and URDF, which introduced a lot of bugs and took some debugging.

We decided to use OAK-D cameras for tracking since the SDK was simple to use and integrate with ROS. We did not really need a depth camera for this since the competition allowed the use of AprilTags. Before the competition details were announced, we trained a lightweight keypoint detector and pose estimator by finetuning a ViT model.

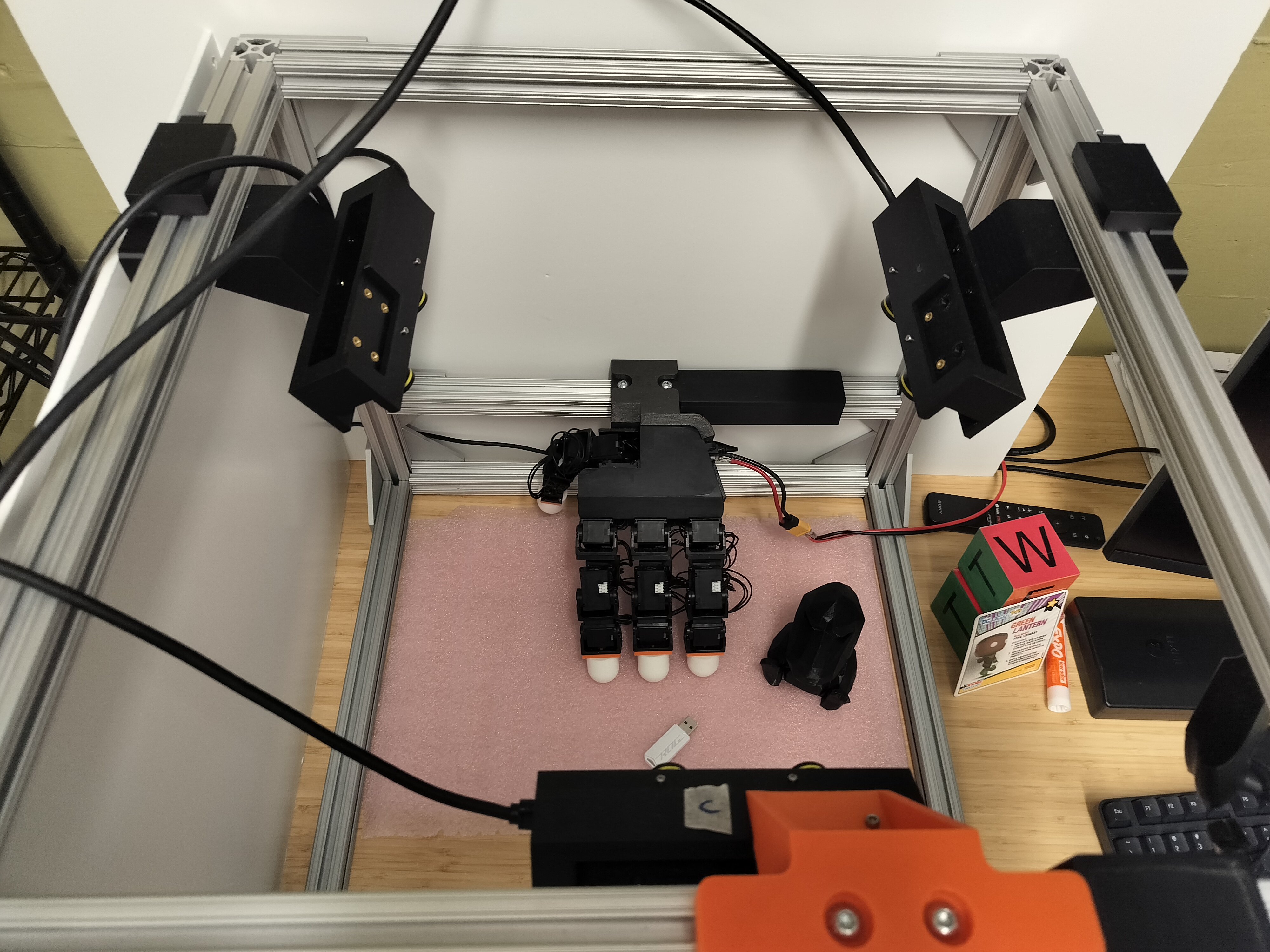

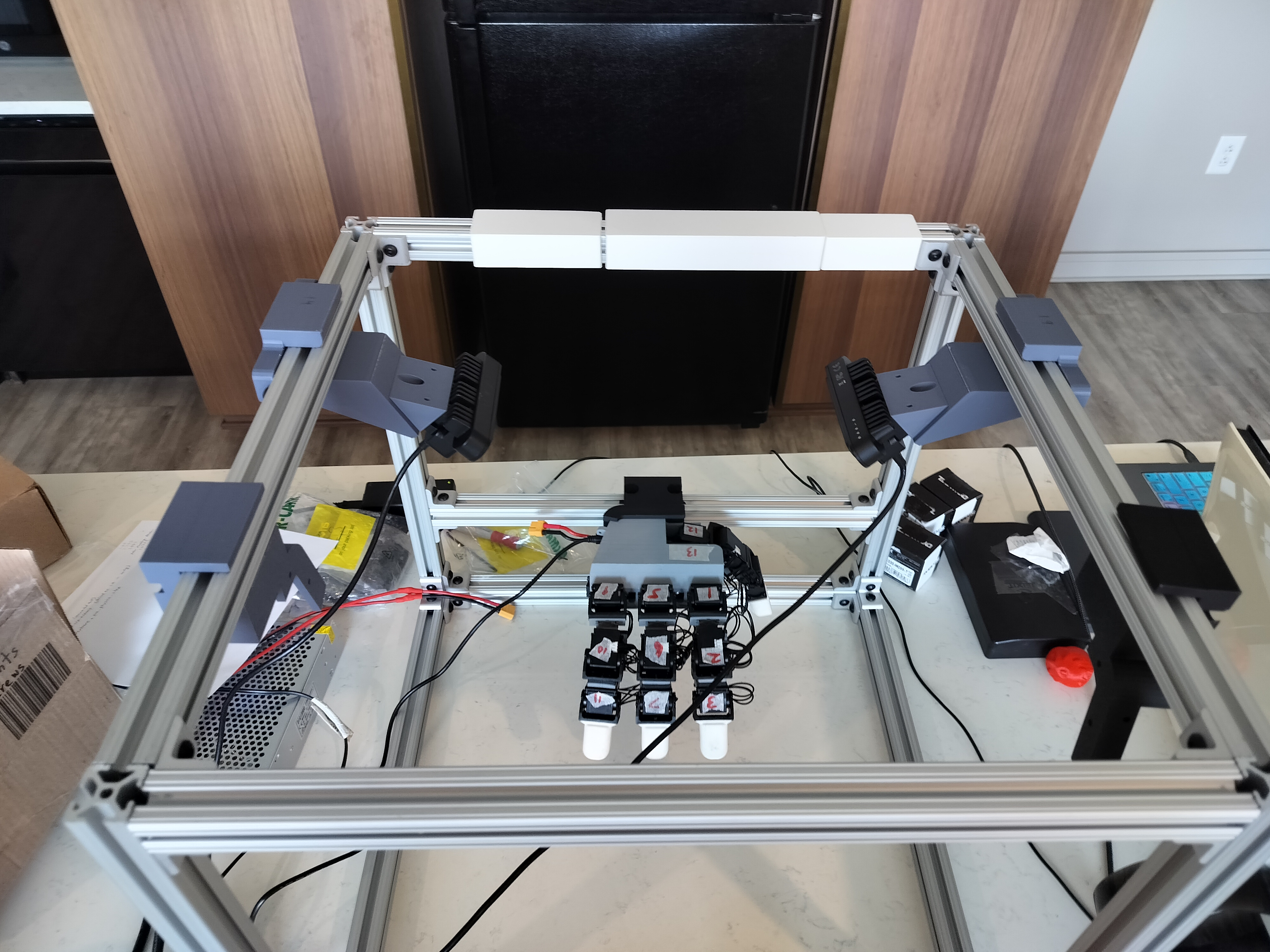

Some images of our build:

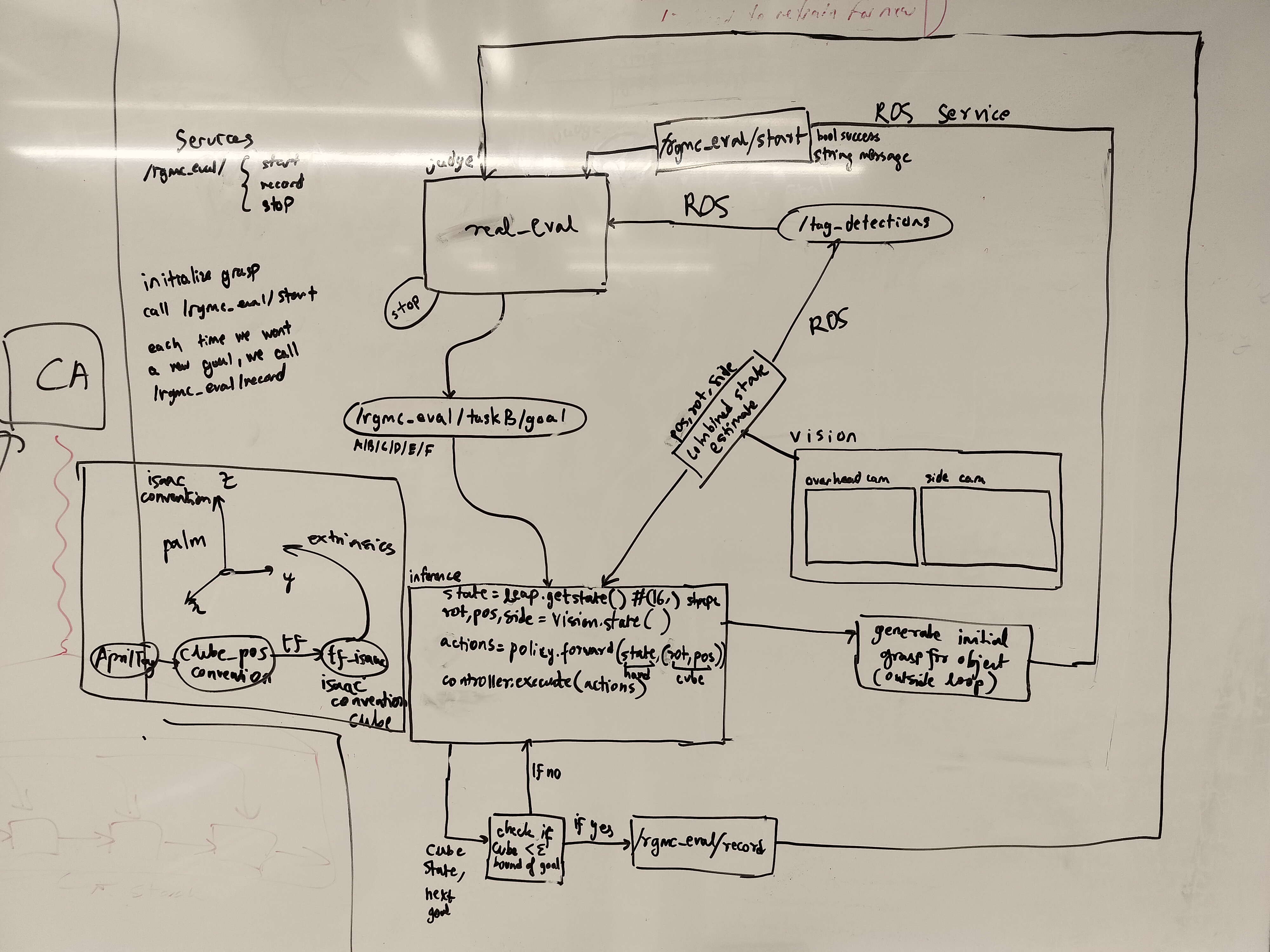

Here’s an image of our pipeline that we structured for on-hardware deployment:

Training Results and Analysis

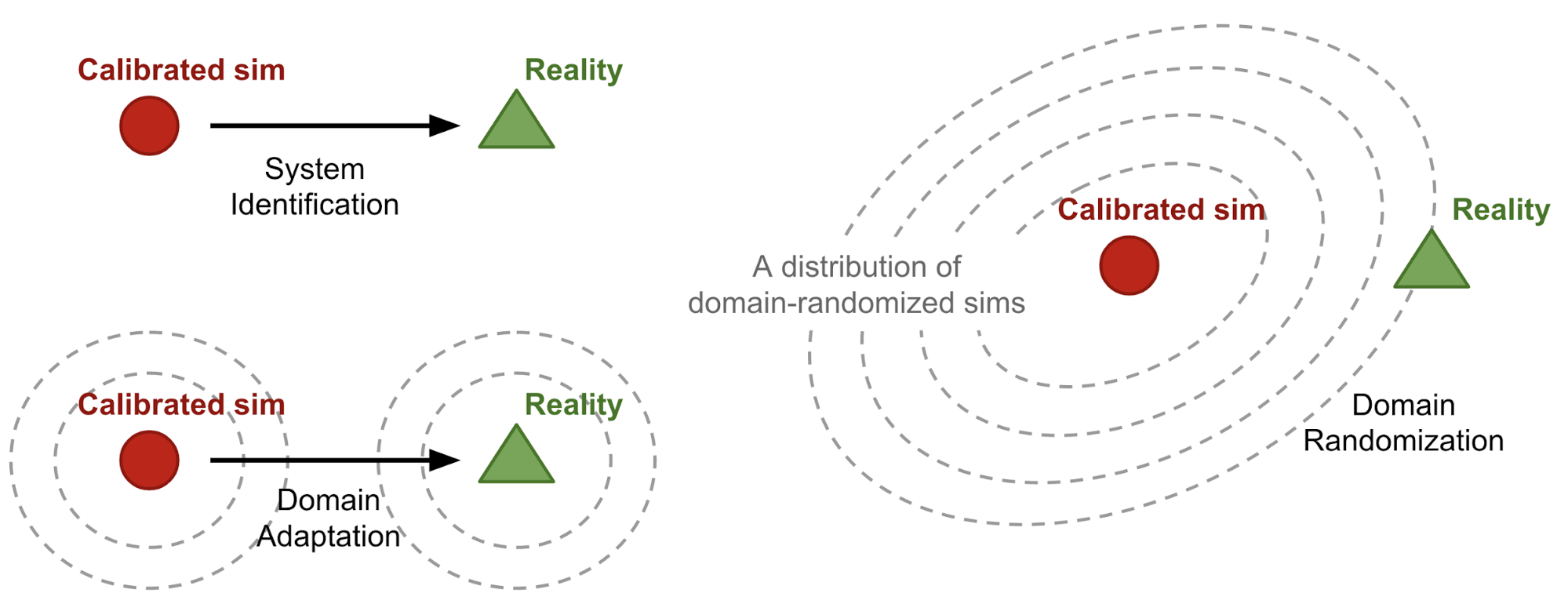

We used DeXtreme as our RL framework. DeXtreme is basically a sim-to-real approach for dexterous manipulation that uses Proximal Policy Optimization (PPO) with a ton of domain randomization to learn robust policies entirely in simulation that can then transfer to real hardware. Lilian Weng has this amazing blog post on domain randomization that I always point people to (I always think of domain randomization as expanding the distribution of the training data to a point where it coincides with the distribution of the real world). The figure below is from her post - it’s exactly what I picture when someone asks me about sim2real transfer. Really solid technical writing throughout. Highly recommend! :)

Back to DeXtreme, the task gets formulated as a discrete-time, partially observable Markov Decision Process (POMDP) where the agent interacts with the environment to maximize discounted rewards. We have a policy $\pi_\theta: \mathcal{O} \times \mathcal{H} \rightarrow \mathcal{A}$ that maps from observations and previous hidden states to actions. The action space is just PD controller targets for all 16 joints on the Leap Hand, which is way simpler than trying to do low-level motor control.
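To make the "PD controller targets" action space concrete, here is a minimal sketch of how a 16-dimensional action could be turned into joint targets and torques. The [-1, 1] action normalization, the joint limits, and the gains `kp` and `kd` are assumptions for illustration; they are not taken from our environment or from DeXtreme.

```python
import numpy as np

NUM_JOINTS = 16  # Leap Hand joint count, from the text above


def action_to_joint_targets(action, lower, upper):
    """Map a policy action in [-1, 1] to joint-angle targets in [lower, upper]."""
    action = np.clip(action, -1.0, 1.0)
    return lower + 0.5 * (action + 1.0) * (upper - lower)


def pd_torque(q_target, q, q_dot, kp=3.0, kd=0.1):
    """PD law: torque follows the position error and is damped by joint velocity.

    kp and kd are hypothetical gains, not tuned values.
    """
    return kp * (q_target - q) - kd * q_dot


# Hypothetical joint limits in radians (the real limits come from the URDF).
lower = np.full(NUM_JOINTS, -0.5)
upper = np.full(NUM_JOINTS, 1.5)

action = np.zeros(NUM_JOINTS)  # a zero action maps to the middle of the range
q_target = action_to_joint_targets(action, lower, upper)
tau = pd_torque(q_target, q=np.zeros(NUM_JOINTS), q_dot=np.zeros(NUM_JOINTS))
```

The policy only has to pick where each joint should go; the PD loop handles how to get there, which is why this is simpler than commanding motors directly.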

PPO works by optimizing this clipped objective function that prevents the policy from changing too drastically between updates: \(L^{CLIP}(\theta) = \hat{\mathbb{E}}_t \left[ \min\left( r_t(\theta) \hat{A}_t, \text{clip}(r_t(\theta), 1-\epsilon, 1+\epsilon) \hat{A}_t \right) \right]\) where $r_t(\theta) = \frac{\pi_\theta(a_t | s_t)}{\pi_{\theta_{old}}(a_t|s_t)}$ is the probability ratio and $\hat{A}_t$ are advantage estimates computed using Generalized Advantage Estimation. We use a discount factor of $\gamma = 0.998$ and clipping parameter $\epsilon = 0.2$.
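As a rough illustration of the two pieces above, the sketch below computes the clipped surrogate and GAE advantages in NumPy. It uses the $\gamma$ and $\epsilon$ values quoted above; the GAE parameter `lam` is an assumption (the post does not state it), and episode terminations are ignored for brevity. This is not the training code we ran.

```python
import numpy as np


def ppo_clip_objective(logp_new, logp_old, advantages, eps=0.2):
    """Clipped surrogate L^CLIP, averaged over the batch (to be maximized)."""
    ratio = np.exp(logp_new - logp_old)  # r_t(theta)
    unclipped = ratio * advantages
    clipped = np.clip(ratio, 1.0 - eps, 1.0 + eps) * advantages
    return np.mean(np.minimum(unclipped, clipped))


def gae(rewards, values, gamma=0.998, lam=0.95):
    """Generalized Advantage Estimation for one rollout.

    `values` has length T + 1: the last entry is the bootstrap value.
    `lam` is a hypothetical choice; terminations are not handled here.
    """
    T = len(rewards)
    advantages = np.zeros(T)
    running = 0.0
    for t in reversed(range(T)):
        delta = rewards[t] + gamma * values[t + 1] - values[t]
        running = delta + gamma * lam * running
        advantages[t] = running
    return advantages


# A ratio of 1.5 with positive advantage is clipped to 1.2;
# a ratio of 0.5 with negative advantage is clipped to 0.8.
logp_old = np.zeros(2)
logp_new = np.log(np.array([1.5, 0.5]))
objective = ppo_clip_objective(logp_new, logp_old, np.array([1.0, -1.0]))
```

The `min` is what makes the objective pessimistic: the policy gets no extra credit for pushing the ratio outside the clip range in the direction the advantage favors.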

For the network architecture, we went with an LSTM-based policy since the hand needs memory to keep track of what it’s been doing with the object. The policy network has an LSTM layer with 1024 hidden units plus layer normalization, followed by 2 MLP layers with 512 units each and ELU activation. The critic network is bigger - 2048 LSTM units - since it gets access to privileged state information during training that the policy doesn’t see, like exact fingertip forces and object dynamics.

The biggest change we made was to the reward function. The original DeXtreme paper trains policies for full 6DOF object reorientation, where you’re trying to match a complete target quaternion. But our competition only cared about which face of the cube was pointing up. So instead of computing rotational distance as the angle between two quaternions, we changed how $d_{rot}$ gets calculated.

Our modified reward function takes the target face (say face ‘B’, which corresponds to the +X direction in the cube’s frame), rotates its normal vector by the cube’s current orientation to get its direction in world coordinates, and then computes the angle between that and the global up vector $\hat{z} = [0, 0, 1]$. So our rotational distance becomes:

\[d_{rot} = \angle(\text{Rot}(\mathbf{q}_{obj}) \cdot \mathbf{n}_{facet}, \mathbf{\hat{z}})\]

The full reward function we ended up with was:

\[R_t = \frac{w_{rot}}{|d_{rot}| + \epsilon_{rot}} + w_{pos} \cdot d_{pos} - P_{action} - P_{action\Delta} - P_{velocity} + B_{success} - P_{fall}\]

where the positional term $d_{pos} = \|\mathbf{p}_{obj} - \mathbf{p}_{goal}\|_2$ keeps the cube in the palm, and we have the usual penalties for action magnitude, smoothness, and joint velocities. When the policy gets the target face within tolerance, it gets a big success bonus.
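The face-up distance and the reward can be sketched in a few lines of NumPy. Only the mapping of face ‘B’ to +X comes from the text; the other face assignments, the (w, x, y, z) quaternion convention, and every weight and bonus value below are hypothetical placeholders. In particular, `w_pos` is taken to be negative here so that drifting away from the goal position lowers the reward, which is an assumption about its sign.

```python
import numpy as np

# 'B' -> +X is from the text; the remaining assignments are hypothetical.
FACE_NORMALS = {
    "A": np.array([0.0, 0.0, 1.0]),
    "B": np.array([1.0, 0.0, 0.0]),
    "C": np.array([0.0, 1.0, 0.0]),
    "D": np.array([-1.0, 0.0, 0.0]),
    "E": np.array([0.0, -1.0, 0.0]),
    "F": np.array([0.0, 0.0, -1.0]),
}
UP = np.array([0.0, 0.0, 1.0])


def quat_rotate(q, v):
    """Rotate vector v by the unit quaternion q = (w, x, y, z)."""
    w, u = q[0], q[1:]
    t = 2.0 * np.cross(u, v)
    return v + w * t + np.cross(u, t)


def face_up_angle(q_obj, face):
    """d_rot: angle between the target face normal (world frame) and up."""
    n_world = quat_rotate(q_obj, FACE_NORMALS[face])
    return np.arccos(np.clip(n_world @ UP, -1.0, 1.0))


def reward(d_rot, d_pos, penalties, success, fell,
           w_rot=1.0, w_pos=-10.0, eps_rot=0.1,
           bonus=250.0, fall_penalty=50.0):
    """Reward with the same terms as R_t; all default values are hypothetical.

    `penalties` is the sum P_action + P_actionDelta + P_velocity.
    """
    r = w_rot / (abs(d_rot) + eps_rot) + w_pos * d_pos - penalties
    r += bonus if success else 0.0
    r -= fall_penalty if fell else 0.0
    return r


# A -90 degree rotation about Y brings the cube's +X axis (face 'B') to +Z.
s = np.sqrt(0.5)
q_b_up = np.array([s, 0.0, -s, 0.0])
d_rot_b = face_up_angle(q_b_up, "B")
```

Note that any rotation of the cube about the vertical axis leaves `face_up_angle` unchanged, which is exactly the freedom the full-quaternion distance does not allow.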

Since we modified the environment for the Leap Hand and changed the task requirements, our observation space was actually simpler than the original DeXtreme setup. The competition only told us which face should be pointing up, not full pose targets. Our actor observations included current cube pose (7D), target face orientation (4D), previous actions (16D), and hand joint angles (16D) for about 50 dimensions total. The critic got way more information including fingertip positions, velocities, forces, and all the domain randomization parameters.
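For reference, stacking the four actor inputs listed above could look like the sketch below. The ordering of the components and the function name `build_actor_obs` are assumptions. The four listed pieces add up to 7 + 4 + 16 + 16 = 43 entries, in the same ballpark as the roughly 50 quoted above.

```python
import numpy as np

# Component sizes as listed in the text; the ordering is an assumption.
ACTOR_OBS_LAYOUT = (
    ("cube_pose", 7),
    ("target_face", 4),
    ("prev_actions", 16),
    ("joint_angles", 16),
)


def build_actor_obs(**components):
    """Concatenate the named components into one flat actor observation."""
    parts = []
    for name, size in ACTOR_OBS_LAYOUT:
        value = np.asarray(components[name], dtype=np.float32)
        if value.shape != (size,):
            raise ValueError(f"{name} must have shape ({size},), got {value.shape}")
        parts.append(value)
    return np.concatenate(parts)


obs = build_actor_obs(
    cube_pose=np.zeros(7),
    target_face=np.zeros(4),
    prev_actions=np.zeros(16),
    joint_angles=np.zeros(16),
)
```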

The biggest improvement from using DeXtreme came from the domain randomization, though. We randomized basically everything: physics parameters like mass, friction, and joint stiffness; observation noise and delays; action latency; and we even injected completely random poses occasionally to make the policy robust to bad state estimates. The original paper uses “Vectorized Automatic Domain Randomization (VADR)”, which automatically adjusts the randomization ranges based on how well the policy is performing.
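Here is a small sketch of the three kinds of randomization mentioned above: per-episode physics sampling, noisy or occasionally random pose observations, and action latency. All ranges, probabilities, and names are hypothetical, and the automatic range adjustment that VADR performs is not shown.

```python
from collections import deque

import numpy as np

# Hypothetical sampling ranges; the values used in training are not listed here.
PHYSICS_RANGES = {
    "mass_scale": (0.5, 1.5),
    "friction": (0.3, 1.2),
    "joint_stiffness_scale": (0.75, 1.25),
}


def sample_physics(rng, ranges=PHYSICS_RANGES):
    """Draw one set of physics parameters, e.g. at every episode reset."""
    return {name: rng.uniform(lo, hi) for name, (lo, hi) in ranges.items()}


def corrupt_pose(rng, pose, noise_std=0.01, random_pose_prob=0.05):
    """Add noise to a 7D pose (position + quaternion), or replace it entirely."""
    if rng.random() < random_pose_prob:
        position = rng.uniform(-0.1, 0.1, size=3)
        quat = rng.normal(size=4)
        return np.concatenate([position, quat / np.linalg.norm(quat)])
    noisy = pose + rng.normal(0.0, noise_std, size=7)
    noisy[3:] /= np.linalg.norm(noisy[3:])  # keep the quaternion unit length
    return noisy


class ActionDelay:
    """Apply each action `delay_steps` control steps after it was issued."""

    def __init__(self, delay_steps, initial_action):
        self.queue = deque(initial_action.copy() for _ in range(delay_steps))

    def step(self, action):
        self.queue.append(action)
        return self.queue.popleft()


rng = np.random.default_rng(0)
physics = sample_physics(rng)
pose = np.array([0.0, 0.0, 0.1, 1.0, 0.0, 0.0, 0.0])
noisy_pose = corrupt_pose(rng, pose)
```

Replacing the pose outright now and then forces the policy to lean on its memory and joint readings instead of trusting a single pose estimate.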

Our best policy took about 6 hours to converge on 8 NVIDIA A40 GPUs, which was way faster than we expected based on the original DeXtreme timings. But we learned something interesting - we had to stop training right at convergence because the domain randomization was so aggressive that if we kept going, the policy actually started getting worse. It’s like the randomization became too extreme and prevented the policy from learning anything coherent.

The policy we ended up with averaged 40 consecutive successes, meaning it could reorient the cube to 40 target faces in a row without dropping it. That was actually pretty decent for our first attempt at this, especially considering we were working with a left-handed Leap Hand which required some hacky environment modifications.

Below is our comprehensive training report from Weights & Biases showing the learning progress, hyperparameter optimization, and performance metrics for our in-hand manipulation policy:

Experiments

Let’s see how we did on the hardware after training our policy in simulation.

Once we had a checkpoint trained in Isaac Sim using RL and domain randomization, we built a vision pipeline that calibrates the cameras and then estimates the pose of the AprilTag with respect to the palm of the Leap Hand. We also put an evaluator in the loop that checks whether the cube has reached the desired orientation and, if so, moves on to the next target orientation.

This pipeline was way simpler in simulation - Isaac Sim’s abstraction layer and its support for domain randomization let us test and iterate quickly in simulation, but deploying on the Leap Hand turned out to be a bit of a challenge.
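The two steps described above (expressing the tag pose in the palm frame, then checking the target face) can be sketched as follows. This is an illustration and not our deployed ROS code: the frame names, the face-to-axis table apart from ‘B’, the tolerance, and the `hold_steps` debounce are all assumptions, and the cube rotation is assumed to be expressed in a frame whose +Z axis points up.

```python
import numpy as np

# 'B' -> +X is from the text; the other assignments are hypothetical.
FACE_NORMALS = {
    "A": np.array([0.0, 0.0, 1.0]),
    "B": np.array([1.0, 0.0, 0.0]),
    "C": np.array([0.0, 1.0, 0.0]),
}


def invert_transform(T):
    """Invert a 4x4 rigid transform using R^T instead of a general inverse."""
    R, t = T[:3, :3], T[:3, 3]
    T_inv = np.eye(4)
    T_inv[:3, :3] = R.T
    T_inv[:3, 3] = -R.T @ t
    return T_inv


def tag_in_palm(T_cam_palm, T_cam_tag):
    """Tag pose in the palm frame, given both poses in the camera frame."""
    return invert_transform(T_cam_palm) @ T_cam_tag


class FaceEvaluator:
    """Step through target faces, advancing once a face stays up long enough."""

    def __init__(self, targets, tol_rad=0.4, hold_steps=3):
        self.targets = list(targets)
        self.tol_rad = tol_rad        # hypothetical tolerance
        self.hold_steps = hold_steps  # hypothetical debounce length
        self.index = 0
        self.streak = 0

    @property
    def done(self):
        return self.index >= len(self.targets)

    def update(self, R_cube):
        """Feed one rotation estimate; return True if the target was just met."""
        if self.done:
            return False
        normal = R_cube @ FACE_NORMALS[self.targets[self.index]]
        angle = np.arccos(np.clip(normal[2], -1.0, 1.0))
        self.streak = self.streak + 1 if angle < self.tol_rad else 0
        if self.streak >= self.hold_steps:
            self.index += 1
            self.streak = 0
            return True
        return False
```

Requiring the face to stay within tolerance for a few consecutive frames guards against a single noisy tag detection triggering the next target.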

Here’s a video of our policy in action:

Since we were working on a one-month sprint with midterms and travel, we couldn’t do a complete analysis of what was going wrong. At ICRA, in the 3 days before the competition, we pulled a hackathon in our Airbnb and tried to get the policy working on hardware.

ICRA’25